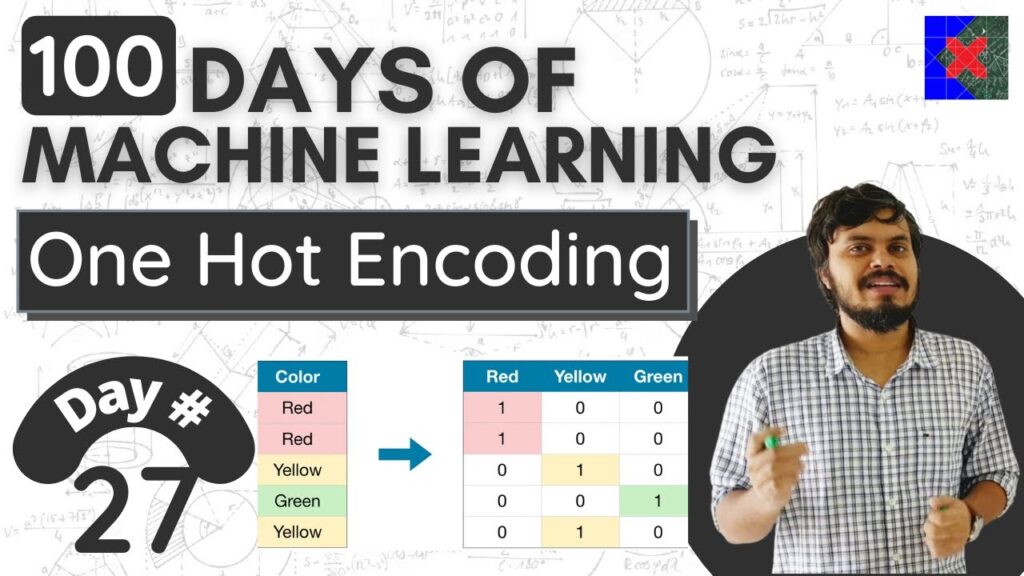

One Hot Encoding Explained: Handling Categorical Data with Machine Learning

Machine learning has revolutionized the way we extract valuable insights from vast datasets. It has become an indispensable tool in various fields, from finance to healthcare. However, one challenge that data scientists often face is how to handle categorical data, such as gender, country, or product type, in machine learning models. This is where techniques like one hot encoding come into play. In this article, we will explore the concept of one hot encoding and understand its significance in handling categorical data.

But before we dive into the specifics, let’s take a step back and consider why categorical data requires special treatment. Machine learning models primarily rely on mathematical operations, and these operations work best with numerical data. Therefore, categorical data needs to be transformed into a numerical format that can be easily understood by these models.

This is where one hot encoding comes in. One hot encoding is a technique used to convert categorical data into a binary representation, allowing machine learning models to process it effectively. It works by creating dummy variables for each category in a categorical feature.

Let’s take a concrete example to better understand how one hot encoding works. Suppose we have a dataset containing information about different fruits, including their type (apple, banana, orange) and color (red, yellow, orange). Here’s what the dataset might look like:

“`

| Fruit | Type | Color |

—————————–

| Apple | Apple | Red |

| Orange | Orange | Orange |

| Banana | Banana | Yellow |

“`

To apply one hot encoding, we will create a binary matrix where each column represents a unique category in a feature. In our example, the “Type” column will be transformed into three separate columns: “Apple,” “Orange,” and “Banana.” Similarly, the “Color” column will be transformed into three columns: “Red,” “Yellow,” and “Orange.”

“`

| Fruit | Apple | Orange | Banana | Red | Yellow | Orange |

——————————————————————

| Apple | 1 | 0 | 0 | 1 | 0 | 0 |

| Orange | 0 | 1 | 0 | 0 | 0 | 1 |

| Banana | 0 | 0 | 1 | 0 | 1 | 0 |

“`

By using one hot encoding, we have transformed the categorical features into a numerical format that can be easily interpreted by machine learning models. Each column represents a category, and if a specific instance belongs to that category, the corresponding column will have a value of 1; otherwise, it will have a value of 0.

Now, you may be wondering why we need to go through this extra step of one hot encoding. The answer lies in the fact that many machine learning algorithms treat categorical data as ordinal, implying an order or ranking, which may not necessarily be the case. By using one hot encoding, we eliminate any ordinal assumptions and ensure that each category is considered equally.

When it comes to implementing one hot encoding in Python, several libraries, such as scikit-learn, offer convenient functions. In fact, scikit-learn provides a OneHotEncoder class that allows us to perform one hot encoding effortlessly.

To further improve our understanding and implementation of one hot encoding, I came across a fantastic video tutorial on YouTube titled “Handling Machine | One Hot Encoding | Handling Categorical Data | Day 27 | 100 Days of Machine Learning.” This video provides a step-by-step explanation of the concept and demonstrates how to implement one hot encoding using Python and the pandas library.

In the video, the instructor begins by explaining the significance of one hot encoding in machine learning and its role in handling categorical data. They emphasize the importance of transforming categorical features into a numerical format for machine learning algorithms to process effectively. The instructor then proceeds to demonstrate how to implement one hot encoding in Python, using the pandas library’s get_dummies function. They provide clear and concise code examples, making it easy for even beginners to follow along.

Throughout the video, the instructor also highlights some potential challenges and considerations to keep in mind while implementing one hot encoding. They discuss strategies for dealing with missing values, handling unseen categories in test data, and avoiding the “dummy variable trap,” a common pitfall associated with one hot encoding.

Overall, the video on “Handling Machine | One Hot Encoding | Handling Categorical Data | Day 27 | 100 Days of Machine Learning” serves as an excellent resource for anyone looking to understand and implement one hot encoding in their machine learning projects. It provides a comprehensive overview of the concept and offers practical insights into its application.

In conclusion, when it comes to handling categorical data in machine learning, one hot encoding is a crucial technique that allows us to effectively preprocess and transform such data into a format that machine learning models can interpret. By converting categorical features into a binary representation, one hot encoding eliminates any ordinal assumptions and ensures each category is treated equally. So, whether you’re a data scientist or a machine learning enthusiast, learning how to implement one hot encoding is a valuable skill that will undoubtedly enhance your data analysis capabilities.

Handling Machine

Hot Encoding: Categorical Data Handling | Day 27 of 100 Days of Machine Learning | Navigating Machine Algorithms